I just want to make funny pictures.

Hey, as long as you don’t try to

- Sell it

- Claim it’s yours

- Use it instead of hiring professionals if you’re a business

I’m not too fussed.

Also don’t call yourself an engineer. You’re a prompt monkey.

So because I use ChatGPT for help coding data analysis scripts, I am no longer a mechanical engineer?

No, you are a mechanical engineer that uses AI.

“Prompt Engineer” is a “real” job title

As long as you don’t proclaim your proficiency in using generative AI as your only claim to the term, it’s fine.

I’d say that depends on how important data analysis is to the job of a mechanical engineer, and on how much help you get from ChatGPT.

I made my avatar with AI gen. Shit’s perfect for things like that.

I’d still pay a real person to make something closer to what I imagine, though. I mean … if I had money, that is.

Use it instead of hiring professionals if you’re a business

Why wouldn’t you though?

Because then artists aren’t getting paid but you’re still using their art. The AI isn’t making art for you just because you typed a prompt in. It got everything it needs to do that from artists.

So it’s more of an ethical “someone somewhere is probably being plagiarized and that’s bad” thing, and not really a business or pragmatic decision. I guess I can get that, but I can’t see many people following through with it.

Some people got mad at a podcast I follow because they use AI-generated episode covers. Which is funny, because they absolutely wouldn’t be paying an artist for that work; it’d just be the same cover every time. So it’s not like they switched from paying someone to not paying them.

The issue is similar to using other people’s data for profit. It’s easy to not feel that’s the case because “it’s the AI that does that, not me.”

There are a lot of concerns around it. Mine is that we’ll get longer periods of one style with minimal variety, because artists stagnate without financial backing. This applies to all gen AI, since it depends on humans for progression; otherwise it stagnates. People are already getting AI art fatigue because it feels like the Adobe Illustrator vector art everyone was doing from 2005 to 2015, because it is. That style was incredibly popular and overused back then, so the training data is brimming with it compared to the other art styles scraped from the internet. It already looks dated, but it’s acceptable because it’s familiar to most people. For AI to move forward, it depends on artists moving art forward first, and that won’t happen as fast if art culture is slowed by a lack of support.

Because that’s a harm to society and economy.

It’s gutting entire swaths of middle-class careers, and funneling that income into the pockets of the wealthy.

If you’re a single-person startup using your own money and you can’t afford to hire someone else, sure. That’s ok until you can afford to hire someone else.

If you’re just using it for your personal hobbies and for fun, that’s probably OK.

But if you’re contributing to unemployment and suppressed wages just to avoid payroll expenses, there is a guillotine with your name on it.

Please don’t use the “but it creates jobs” argument.

Me shitting in the street also “creates jobs” because someone has to clean it.

It feels like you’re directing that at me, but I agree with you, so I’m not sure what tone that was written in

I think what matters is whether you would’ve otherwise hired someone. If not, I can’t see it making any impact.

And in a lot of cases you would’ve paid a stock photo company anyway.

I don’t agree:

Before if you chose not to hire someone, you’d be competing against better products from people who did hire someone. Hiring someone gave them a competitive advantage.

By removing the competitive advantage of hiring someone, you’re destroying an entire career path, harming the economy and society in general.

A lot of the AI use I’m personally seeing is shit most people wouldn’t spend money on, or stuff where, instead of paying for a stock photo, they just generate shit and are done with it. Would they ever have paid someone to do the work, and would anyone have agreed to do such small work that’d never pay anything reasonable? Most likely not.

Before if you chose not to hire someone, you’d be competing against better products from people who did hire someone. Hiring someone gave them a competitive advantage.

I guess I don’t believe quite as much in the invisible hand of capitalism. I rather think it’s a race to the bottom, with companies buying some cheap slop from a stock photo company to use on their webpage or whatever, and now people pay AI companies for it instead, if they pay anyone. I can’t see a big impact from that sort of shit being replaced.

I also think capitalism is a race to the bottom, but I believe it is so because it subverts the value of labor. It’s shit like AI that makes it a race to the bottom.

shit most wouldn’t spend money on or stuff where instead of paying for a stock photo they just generate shit and be done with it.

Then pay for the stock photo. There, an artist is being paid for their work. But realistically the little stuff you’re talking about is the occupation of entire departments in megacorps.

Paying a stock photo “artist” or some AI slop “artist”, I’m not sure it makes any difference. The stuff AI generates is already such sloppy, generic corporate BS that it’s hard to think of anyone deserving to be paid anything for it. It’s mimicking a horrid generic art style, and a horrid generic art style like that isn’t owned by any particular artist anyway.

Why not sell it? Pet Rocks were sold.

Why not claim it’s yours? You wrote the prompt. See Pet Rocks above.

Not use it and instead hire a professional? That argument died with photography. Don’t take a photo, hire a painter!

So what if AI art is low quality. Not every product needs to be art.

Why not sell it? Pet Rocks were sold.

Why not claim it’s yours? You wrote the prompt. See Pet Rocks above.

Because, unlike pet rocks, AI generated art is often based on the work of real people without attribution or permission, let alone compensation.

Not use it and instead hire a professional? That argument died with photography. Don’t take a photo, hire a painter!

So what if AI art is low quality. Not every product needs to be art.

Do you know what solidarity is? Any clue at all?

Seems like the concept is completely alien to you, so here you go:

Do you know what solidarity is?

Do you know what a Luddite is?

The simplest argument, supported by many painters and a section of the public, was that since photography was a mechanical device that involved physical and chemical procedures instead of human hand and spirit, it shouldn’t be considered an art form;

That a particular AI could have used copyrighted work is a completely different argument from the one first stated.

Do you know what a false equivalence is? If not, just reread your own comment for a fucking perfect example.

I’m not wasting any more time and effort trying to explain the blindingly obvious to your willfully obtuse ass. Have the day you deserve.

Insults because you have no response.

Copyright and intellectual property are a lie cooked up by capitalists to edge indie creators out of the market.

True solidarity is making AI tools and freely sharing them with the world. Not all AIs are locked down by corporations.

Those capitalists support AI because it would allow them to further cut out all creators from the market. If you want solidarity, support artists against the AI being used to replace them.

Please explain to me how open source AI allows a corporation to cut creators out of the market.

Removed by mod

Yeah, nothing is more bougie than independent artists, most of whom are struggling to make ends meet even WITH a day job… 🙄

Says the person supporting capitalist corporations pushing AI as a replacement for real human artwork?

I agree, except you’re the one showing solidarity with the bourgeoisie.

AI is a tool of the bourgeoisie to suppress the working class.

Ah yes, how dare artists make $5 an hour instead of $0 while you pay a corporation a subscription fee instead. That’ll show those lazy artists that they’ve had it too good for too long.

Why not sell it? Because chances are the things it was trained on were stolen in the first place, and you have no right to claim them.

Why not claim it’s yours? Because it is not, it is using the work of others, primarily without permission, to generate derivative work.

Not use it and hire a professional? If you use AI instead of an artist, you will never make anything new or compelling, because AI cannot generate images without a stream of information to train on. If we replace artists with AI, like dumbass investors and CEOs want, we will reach a point where AI is training on AI, and the well will be poisoned. AI should be used simply as a tool to help with the creation of art, if anything; using it to generate “new” artwork is a fundamentally doomed concept.

These articles feel like they aren’t really tied to my feelings about AI, frankly. I’m not really concerned about who is getting credited for the art that the AI creates, copyright laws just work to keep the companies trying to push for AI in power already. I am concerned that AI will be used to replace those who create the art and make it even harder for artists to succeed.

Copyright is used more by companies to sue artists, or even just individuals, than it is to protect your art.

It is an archaic grasp of control created by Disney to keep people from drawing a mouse with 2 round ears.

The help it supposedly provides you doesn’t come close to the amount of sacrifices you have to make to gain it.

I did say in the message that copyright is being used by companies more than artists. That’s why I wasn’t arguing about AI from a copyright angle because copyright doesn’t really help artists anyway.

They go over that, you should give them another read.

Could you please explain the point you’re making rather than expecting me to come to a conclusion reading the articles you linked?

I see nothing in them even after a re-read that would address the idea of AI being used to replace artists. If anything these articles are just confirming that those fears are well founded by reporting on examples such as corporations trying to get voice actors to sign away the rights to their own voices.

It should have been impossible to miss the first article linking to this companion blog post, and I meant to link this article instead of the second one.

To quote a funny meme: “I’m not doing homework for you. I have known you for 30 seconds and enjoyed none of them.”

You should make an argument and then back it up with sources, not cite sources, and expect them to make your point for you. Not everybody is going to come to the same conclusions as you, nor will they understand your intent.

This is a really complex subject and what I linked covers the issues thoroughly, better than I can.

Why not sell it? Pet Rocks were sold.

I didn’t know that pet rocks were made by breaking stolen statues and gluing googly eyes on them.

If your AI was trained entirely off work you had the rights to, sure. But it was not.

Why is it valid for you to be trained off of art you didn’t have rights to but not for an open source program running locally on my PC?

It would not be a copyright violation if you created a completely original super hero in the art style of Jack Kirby.

What’s the equivalence you’re trying to make? The program itself may be open source, but the images the model’s been trained on are copyrighted.

And if you personally hand made it, sure. By nature, nothing an LLM makes is “completely original”

The equivalence is that nothing human artists make is “original” either. Everyone is influenced by what they have seen.

You are arguing that if you created a completely original comic book character in the art style of Jack Kirby, you committed a copyright violation.

Computers do not get “inspiration” or “influence”, and that’s quite literally not what I’m arguing. Maybe I’m just talking to an AI lol

Your argument is that you can get a commission request, perhaps for a mascot (create a new comic hero in the style of Jack Kirby), and it’s perfectly fine for you to Google examples of Kirby’s style to create the picture.

But if a computer does the same it’s a copyright violation.

Nearly nobody is arguing against using AI for personal fun.

People are arguing against AI destroying entire career segments without providing benefit to society, especially to those displaced. People are arguing against how it so easily misleads people, especially when used as a learning aid. People are arguing against the enormous resource usage.

There’s also the fact that it’s an ecological disaster when it comes to both carbon emissions and using up potable water.

That’s why it’s always good to run them locally (if you use them for fun).

That’s almost certainly more wasteful. The machines they run them on are going to be far more efficient.

Running it locally is better because of all the other data mining that goes along with capitalism.

But usually you don’t cool your PC with fresh water. That’s something a lot of data centers do.

That’s true.

But in terms of power and emissions, data centers are far, far better. The waste of potable water could be addressed if we forced them to change, but the inefficiency of running locally cannot be.

I still prefer to run locally anyway, because fuck the kind of people who are trying to sell AI, but it is absolutely more inherently wasteful.

A GPT-4 level language model and current Flux dev can easily run on a standard M3 MacBook Air via ggml.

My father-in-law told me how a guy at work created several pictures with AI for decorating the floor, bragging about saving costs since he didn’t use licensed pictures. But the AI may have learned to create those images from licensed pictures. Artists lose money when companies do this, even though those companies could very well afford to pay the artists. I guess a private person creating memes with AI is not threatening anyone’s job.

Is there any free AI for personal use?

You can run Stable Diffusion locally on your PC.

I don’t know how to set that up, is there a guide?

You can try running the AUTOMATIC1111 Stable Diffusion web UI (https://github.com/AUTOMATIC1111/stable-diffusion-webui).

That is a 404.

Now it works.

Yes, many. It depends on what you are looking for (image generation, text generation, text to speech, speech to text, etc) and what hardware you have.

There is, and that’s typically less bad, but it still has ethical issues with how it was trained.

I was gonna go ahead and argue about this, but sadly I have been depicted as a soyjak. My lawyers told me that there is literally nothing I can do about this now.

And that’s why you always pack your Uno-Reverse-Card :P

Don’t worry, Mr. Mofu, I’ve got this argument covered for you. Ahem…

NOOOOOOOOO

That’s great! These things are super fun. Just don’t call yourself an artist or try to copyright your generations. That’s like pretending to be a musician because you’re good at Guitar Hero.

In fairness, I had a college buddy who learned to play real drums by playing a lot of Rock Band. He was no Joey Jordison, but he wasn’t half bad.

Aw, that’s cute, a drummer thinks he’s a musician too? (I kid, that’s a running joke in music circles; percussionists are definitely musicians, and we’d be lost without them.) That’s awesome! I suppose expert drumming in Rock Band would be a lot like the real thing. A program like Rock Band would probably work as a great drum trainer on a real set.

Woah there, he didn’t say bassist

Lmao I was the bassist in our jam sessions, but I also play trumpet so that did spice things up a bit.

So did I, and I didn’t even know I could play until years later when I sat in front of a friend’s kit for a lesson with them. They basically talked me through the setup, gave me a song to play, and I just played the opening without much fuss. They told me I didn’t need the lesson, I could already play and I just needed time on the kit, left the room and let me go ham.

Honestly, who cares about being an artist? There are always going to be snobs trying to tear you down or devalue your efforts. No one questions whether video games are art now, but that took about twenty years after people began seriously pushing the subject. The same thing happened with synthesizers and samplers in the 1980s, and as a result there are fewer working drummers today, but without them we would not have hip hop or house, and that would have been a huge cultural loss.

Generative art hasn’t found its Marley Marl or Frankie Knuckles yet, but they’re out there, and they’re going to do stuff that will blow our minds. They didn’t need to be artists to change the world.

Image generators don’t produce anything new, though. All they can do is iterate on previously sampled works which have been broken down into statistical arrays and then output based on the probability that best matches your prompt. They’re a fancier Gaussian Blur tool that can collage. To compare to your examples, they’re making songs that are nothing but samples from other music used without permission without a single original note in them, and companies are selling the tool for profit while the people using it are claiming that they wrote the music.

Also, people absolutely do still argue that video games aren’t art (and they’re stupid for it), and it takes tons of artists to make games. The first thing they teach you about 3d modeling is how to pick up a pencil and do life drawing and color theory.

The issue with generative AI isn’t the tech. Like your examples, the tech is just a tool. The issues are the wage theft and copyright violations of using other people’s work without permission and taking credit for their work as your own. You can’t remix a song and then claim it as your own original work because you remixed 5 songs into 1. And neither should a company be allowed to sell the sampler filled with music used without permission and make billions in profit doing so.

By the same logic, artists don’t produce anything new either.

All art is derivative. If an artist lived in a cave, they would not be able to draw a sunset.

Have you ever heard the saying that there are only 4 or 5 stories in the world? That’s basically what you’re arguing, and we’re getting into heavy philosophical areas here.

The difference is in the process. Anybody can take a photo, but it takes knowledge and experience to be a photographer. An artist understands concepts in the way that a physicist understands the rules that govern particles. The issue with AI isn’t that it’s derivative in the sense that “everything old is new again” or “nature doesn’t break her own laws,” it’s derivative in the sense that it merely regurgitates a collage of vectorized arrays of its training data. Even somebody who lives in a cave would understand how light falls and could extrapolate that knowledge to paint a sunset if you told them what a sunset is like. Given A and B, you can figure out C. The image generators we have today don’t understand how light works, even with all the images on the internet to examine. They can give you sets of A, B, and AB, but never C. If I draw a line and then tell you to draw a line, your line and my line will be different even though they’re both lines. If you tell an image generator to draw a line, it’ll spit out what is effectively a collage of lines from its training set.

And even this would only matter in terms of prompters saying that they are artists because they wrote the phrase that caused the tool to generate an image, but we live in a world where we must make money to live, and the way that the companies that make these tools work amounts to wage theft.

AI is like a camera. It’s a tool that will spawn entirely new genres of art and be used to improve the work of artists in many other areas. But like any other tool, it can be put together and used ethically or unethically, and that’s where the issues lie.

AI bros say that it’s like when the camera was first invented and all the painters freaked out. But that’s a strawman. Artists are asking, “Is a man not entitled to the sweat of his brow?”

Copyright is a whole mess and a dangerous can of worms, but before I get any further, I just want to quote a funny meme: “I’m not doing homework for you. I’ve known you for 30 seconds and enjoyed none of them.” If you’re going to make a point, give the actual point before citing sources because there’s no guarantee that the person you’re talking to will even understand what you’re trying to say.

Having said that, I agree that anything around copyright and AI is a dangerous road. Copyright is extremely flawed in its design.

I compare image generators to the Gaussian Blur tool for a reason: it’s a tool that applies an algorithm to its inputs, in this case your prompt and its training set. And like any other tool, its output on its own is derivative of all the works in its training set, so the burning question is whether that training data was ethically sourced, i.e. used with permission. Did the companies behind the tool have the right to use the images they did, and how could that be proven? I’m a fan of requiring generators to publish a list of the works used in their training data somewhere, basically a licensing system similar to the one for open source software. That way, people could openly license their work for use (or not) and would have a legal way to prove whether their works were used without permission. Some companies are actually moving to commissioning artists to create works specifically for their training sets, and I think that’s great.

AI is a tool like any other, and like any other tool, it can be made using unethical means. In an ideal world, it wouldn’t matter because artists wouldn’t have to worry about putting food on the table and would be able to just make art for the sake of following their passions. But we don’t live in an ideal world, and the generators we have today are equivalent to the fast fashion industry.

Basically, I ask, “Is a man not entitled to the sweat of his brow?” And the AI companies of today respond, “No! It belongs to me.”

There’s a whole other discussion to be had about prompters and the attitude that they created the works generated by these tools and how similar they are to corporate middle managers taking credit for the work of the people under them, but that’s a discussion for another time.

Works should not have to be licensed for analysis, and Cory Doctorow very eloquently explains why in this article. I’ll quote a small part, but I implore you to read the whole thing.

This open letter by Katherine Klosek, the director of information policy and federal relations at the Association of Research Libraries, further expands on the pitfalls of this kind of thinking and the implications for broader society. I know it’s a lot, but these are wonderfully condensed explanations of the deeper issues at hand.

Calling yourself a chef because you typed in what you wanted on a food delivery app.

If only there was a way to make funny pictures without AI…

The problem with Generative Neural Networks is not generally the people using them so much as the people who are creating them for profit using unethical methods.

As far as I’m concerned, if you’re using AI it’s no worse than grabbing a random image from the internet, which is a common and accepted practice for many situations that don’t involve a profit motive.

The “profit motive” is just the tip of the iceberg.

I’ve seen people stop grabbing random images from the web and go full AI instead. With reverse image searches, a grabbed image even doubled as an advertisement for whoever made it; nowadays you get even less of that.

I’ve seen people stop grabbing random images from the web and go full AI instead.

Okay. What’s your point?

Nice Strawman you got there.

deleted by creator

Another big argument is the large resource and environmental cost of AI. I’d rather laugh at a shitty Photoshop or MS Paint meme (like this one) than at a funny image created in some water-hogging, energy-guzzling server warehouse.

You’re confusing LLMs with other AI models; LLMs are orders of magnitude more energy-demanding than other AI. It’s easy to see why if you’ve ever looked at self-hosting AI: you need a cluster of top-line business GPUs to run modern LLMs, while an image generator can run at home on most consumer Nvidia RTX 3000- or 4000-series GPUs. Generating images is about as costly as playing a modern video game, and only while it’s generating.

I’ve gotten arguments that it’s theft, because technically the AI is using other artists’ work as resources for the images it produces. I’ve pointed out that that’s more like copying another artist’s style than theft, which real artists do all the time, but apparently it’s different when a computer algorithm does it?

Look, I understand people’s fears that AI image generation is going to put regular artists out of work, I just don’t agree with them. Did photography put painters out of work? Did the printing press stop the use of writing utensils? Did cinema cause theatre to go extinct?

No. People need to calm down and stop freaking out about technology moving forward. You’re not going to stop it; so you might as well learn to live with it. If history is a reliable teacher, it really won’t be that bad.

Well said.

I’d like to add that the biggest problem, IMO, is the closed-source nature of the models. Corporations using our collective knowledge, without permission, to create AI and sell it back to us is unethical at best. All AI models should be open source for public access, sort of like libraries. Corpos are thrilled we’re fighting about copyright pennies instead, I’m sure.

Except it isn’t copying a style. It’s taking the actual images and turning them into statistical arrays and then combining them into an algorithmic output based on your prompt. It’s basically a pixel by pixel collage of thousands of pictures. Copying a style implies an understanding of the artistic intent behind that style. The why and how the artist does what they do. Image generators can do that exactly as well as the Gaussian Blur tool can.

The difference between the two is that you can understand why an artist made a line and copy that intent, but you’ll never make exactly the same line. You’re not copying and pasting that one line into your own work, while that’s exactly what the generator is doing. It just doesn’t look like it because it’s buried under hundreds of other lines taken from hundreds of other images (sometimes - sometimes it just gives you straight-up Darth Vader in the image).

It’s taking the actual images and turning them into statistical arrays and then combining them into an algorithmic output based on your prompt.

So looking at images to form a generalised understanding of them, and then reproducing them based on additional information, isn’t exactly what our brain does to copy someone’s style?

You are arguing against your own point here. You don’t need to “understand the artistic intent” to copy. Most artists don’t.

And just about any artist can draw Darth Vader as well. Almost any argument that isn’t based on ethics or intent can be applied equally to artists or to sufficiently convoluted machine models.

But just about any artist isn’t reproducing a still from The Mandalorian in the middle of a picture, like right-clicking and hitting “save as” on a picture from a Google search. These generators have done that multiple times. A “sufficiently convoluted machine model” would be a sentient machine. At the level required for what you’re talking about, we’re getting into the philosophical question of what it means to be a sentient being, which is so far removed from these generators as to be irrelevant to the point. And at that point, you’re not creating anything anyway. You’ve hired a machine to create for you.

These models are tools that use an algorithm to collage pre-existing works into a derivative work. They can not create. If you tell a generator to draw a cat, but it hasn’t any pictures of cats in its data set, you won’t get anything. If you feed AI images back into these generators, they quickly degrade into garbage. Because they don’t have a concept of anything. They don’t understand color theory or two point perspective or anything. They simply are programmed to output their collection of vectorized arrays in an algorithmic format based upon certain keywords.

Why are you attaching all these convoluted characteristics to art? Is it because you are otherwise unable to claim computer art isn’t art?

Art does not need to have intent. It doesn’t need philosophy. It doesn’t need to be made by a sentient being. It doesn’t need to be 100% original, because no art ever is. So what if a computer created it?

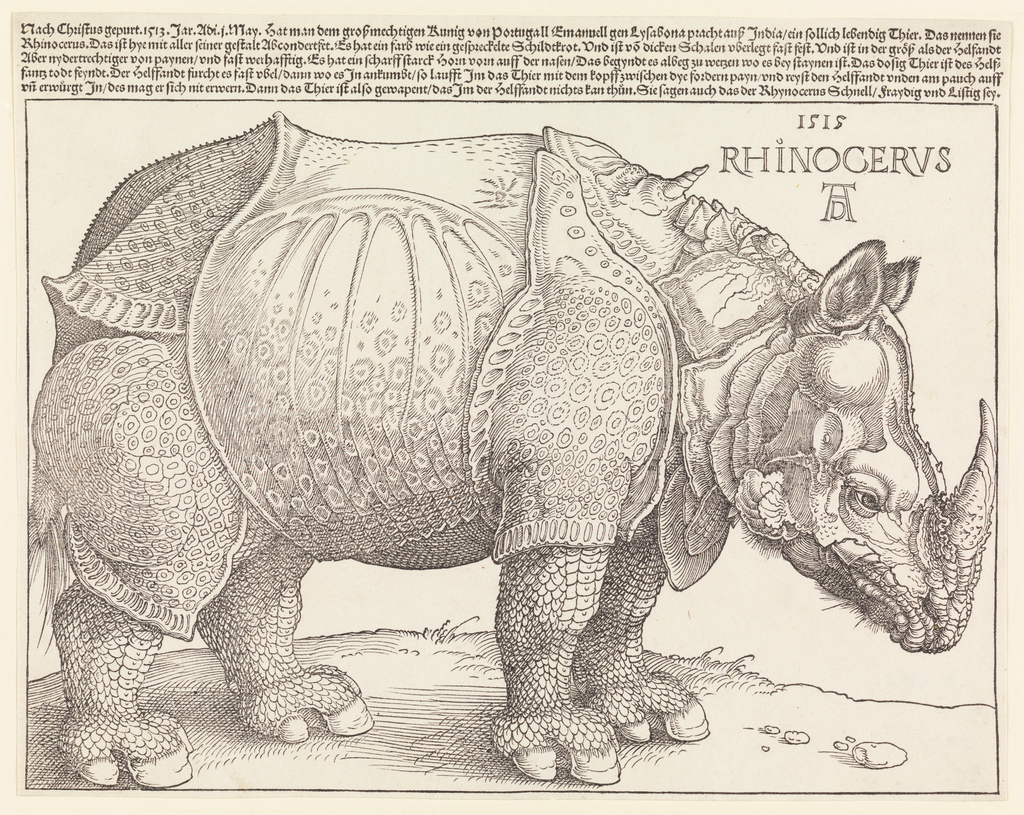

If you encounter an artist who never saw a cat, they would also not be able to paint it. Just look at these medieval depictions of lions where it is clear the artist never saw one.

Also, Dürer’s Rhino.

But he tried. That’s the important thing.

Wee! Haha! Fun!!

sounds of a dozen methane gas generators humming away

This happens a lot in music. It’s okay to listen to music that serves other purposes than art. Gatekeeping is ridiculous.

I’m a musician. I play more instruments than you can even name correctly. I can make a tritone substitution without you even noticing. I don’t give a shit if German Schlager music is worse than country. If I want to watch Eurovision and enjoy myself and pay to vote for songs in foreign languages, I will do so.

You cannot stop me from enjoying stupid music.

Yup. There was a commercial I saw for like, Amazon or something that had the Canon in D mixed with some more modern vocals. While trying to find it (because I liked it) someone on Reddit was bitching about how Pachelbel never meant his work to be used that way or something and that if you like it you don’t know good music.

Bitch, I sing with a symphony orchestra regularly and have done so for 15 years. I’ve played instruments my whole life. Don’t gatekeep music.

The issue has never been the tech itself. Image generators are basically just a more complicated Gaussian Blur tool.

The issue is, and always has been, the ethics involved in the creation of the tools. The companies steal the work they use to train these models without paying the artists for their efforts (wage theft). They’ve outright said that they couldn’t afford to make these tools if they had to pay copyright fees for the images that they scrape from the internet. They replace jobs with AI tools that aren’t fit for the task because it’s cheaper to fire people. They train these models on the works of those employees. When you pay for a subscription to these things, you’re paying a corporation to do all the things we hate about late stage capitalism.

I think that, in many ways AI is just worsening the problems of excessive copyright terms. Copyright should last 20 years, maybe 40 if it can be proven that it is actively in use.

Copyright is its own whole can of worms that could have entire essays just about how it and AI cause problems. But the issue at hand really comes down to one simple question:

Is a man not entitled to the sweat of his brow?

“No!” Says society. “It’s not worth anything.”

“No!” Says the prompter. “It belongs to the people.”

“No!” Says the corporation. “It belongs to me.”

I think you are making the mistake of assuming disagreement with your stance means someone would say no to these questions. Simply put - it’s a strawman.

Most people (yes, even corporations, albeit much less so the larger ones) would say “Yes” to this question at face value, because they would want the same for their own “sweat of the brow”. But certain uses after the work is created no longer have a definitive “Yes” as an answer, which is why your ‘simple question’ is not an accurate representation: it draws no distinction between them. You cannot stop your publicly posted work from being analyzed, by human or computer. This is firmly established. As others in this thread have said, reducing protections for analysis will be detrimental to artists as well as everyone else. It would quite literally slow, if not halt, society’s ability to advance, since most research requires analysis of existing data, and most of that analysis is computer-assisted.

Artists have always been undervalued, I will give you that. But to mitigate that, we should provide artists better protections that don’t rely on breaking down other freedoms. For example, UBI. And I wish people who are against AI would focus on that, since it is actually something you could get most of society to agree on, and it would actually help artists. Fighting against technology that, besides its negatives, also provides great positives is a losing battle.

It’s not about “analysis” but about for-profit use. Public domain still falls under Fair Use. I think you’re being too optimistic about support for UBI, but I absolutely agree on that point. There are countries that believe UBI will be necessary in a decade’s time as more and more of the population becomes permanently unemployed because their jobs are replaced. I’ll say myself that I don’t think anybody would really care if their livelihoods weren’t at stake (except for having to deal with the people who look down on artists and say that writing prompts makes them just as good as, if not better than, artists). As it stands, artists are already forming their own walled-off communities to isolate their work from being publicly available, and creating software to poison LLMs. So either art becomes largely inaccessible to the public, or some form of horrible copyright action is taken, because those are the only options available to artists.

Ultimately, I’d like a licensing system put in place, like the ones for open source software, where people can license their works and companies have to cite the sources of their training data. Academics have to cite their sources for research, and holding for-profit companies to the same standard seems like it would be a step in the right direction. Simply require your data scraper to keep track of where it got its data from in a publicly available list. That way, if they’ve used stuff that they legally shouldn’t have, it can be proven.

If you think I’m being optimistic about UBI, I can only question how optimistic you are about your own position receiving widespread support. So far not even most artists stand behind anti-AI standpoints, just a very vocal minority and their supporters, who even threaten and bully other artists that don’t share their views.

It’s not about “analysis” but about for-profit use. Public domain still falls under Fair Use.

I really don’t know what you’re trying to say here. Public domain is free of any copyright, so you don’t need a fair use exemption to use it at all. And for-profit use is not a factor in whether analysis is allowed or not. If it were, again, it would stagnate society’s ability to invent and advance, since most use is for profit. But even if it weren’t, one company could produce the dataset or the model as a non-profit, and another company could use that for profit. It doesn’t hold up.

As it stands, artists are already forming their own walled off communities to isolate their work from being publicly available

If you want to avoid being trained on by AI, that’s a pretty good way to do it, yes. It can also be combined with payment. So if that helps artists, I’m all for it. But I have yet to hear any of that from the artists I know, nor have I seen a single practical example of it that wasn’t already explicitly private (e.g. commissions or a Patreon). Most artists make their work to be seen, and that has always meant accepting that someone might take your work and be inspired by it. My ideas have been stolen blatantly, and I cannot do a thing about it. That is the compromise we make between creative freedom and ownership, since the alternative would be disastrous. Even if people pay for access, once they’ve done so they can still analyze and learn from it. But yes, if you don’t want your ideas to be copied, never sharing them is a sure way to do that, but that is antithetical to why most people make art to begin with.

creating software to poison LLMs.

These tools are horribly ineffective, though. They waste artists’ time and/or degrade the artwork to the point that humans don’t enjoy it either. It’s an artist’s right to use them, but they’re essentially snake oil that plays on artists’ fears of AI. But that’s a whole other discussion.

So either art becomes largely inaccessible to the public, or some form of horrible copyright action is taken because those are the only options available to artists.

I really think you are being unrealistic and hyperbolic here. Neither of these has happened, nor has much chance of happening. There are billions of people producing works that could be considered art, and with making art comes the desire to share it. Sure, there might only be millions who make great art, but if they mobilized together that would be world news, given that a workers’ strike in Hollywood managed that with a significantly smaller number of artists.

Ultimately, I’d like a licensing system put in place … Academics have to cite their sources for research … That way, if they’ve used stuff that they legally shouldn’t, it can be proven.

The reason we have sources in research is not licensing. It is to support legitimacy and to build upon the work of others. I wouldn’t be against sourcing, but it is a moot point, because companies that make AI models don’t typically publish their datasets. So these datasets might very well be sourced. One well-known public dataset, LAION-5B, does list source URLs. But again, because analysis can be performed freely, this is not a requirement.

Creating a requirement to license data for analysis is what you are arguing for here. I can already hear every large corporation salivating in the background at the idea. Every creator in existence would have to pay licensing fees to some big company because they interacted with its works at some point in their life and something they made looked somewhat similar. And copyright is already far more of a tool for big corporations than for small creators. This is a dystopian future to desire.

We’re already living in a dystopia. Companies are selling your work to be used in training sets already. Every social media company that I’m aware of has already tried it at least once, and most are actively doing it. Though that’s not why we live in a dystopia, it’s just one more piece on the pile.

When I say licensing, I’m not talking about licensing fees like the ones social media companies are already taking in, I’m talking about open source software style licensing: groups of predefined rules that artists can apply to their work, which AI companies must abide by if they want to use it. These licenses range from “do whatever you want with my code” to “my code can only be used to make not-for-profit software,” and all derivative works have the same license applied to them. Obviously, the closed source alternative doesn’t apply here; the d’jinn’s already out of the bottle, and as you said, once your work is out there, there’s always the risk somebody is going to steal it.

I’m not against AI, I’m simply against corporations being left unregulated to do whatever the hell they want. That’s one of the reasons to make the distinction between people taking inspiration from a work and an LLM being trained on that work as part of its dataset. The profit motive is largely antithetical to progress. Companies hate taking risks. Even at the height of corporate research spending, the so-called Blue Skies Research, the majority of research funding came from the government. Today, medical research is done at colleges and universities on government dollars, with companies coming in afterward to patent a product out of the research once there is no longer any risk. This is how AI companies currently work: letting people like you and me do all the work and then swooping in to take it and turn it into a multi-billion-dollar profit. The work that made the COVID vaccines possible was done decades before, but no company could figure out how to make a profit off of it until COVID happened, so nothing was ever done with it.

As for walled-off communities of artists, you should check out Cara, a new social media platform that’s a mix of ArtStation and Instagram and 100% anti-AI. I forget the details, but AI art is banned on the site, and I believe they have Nightshade or something similar built in. I believe that when it was first announced, something like 200,000 people created accounts in the first 3 months.

People aren’t anti-AI. They’re anti late-stage capitalism. And with what little power they have, they’d rather poison the well or watch it all burn than be trampled on any further.

You don’t solve a dystopia by adding more dystopian elements. Yes, some companies are scum, and they should rightfully be targeted and taken down. But the way you do that is by targeting those scummy companies specifically, and creatives aren’t the only industry suffering from them. There is broad-spectrum legislation to do so, such as income-based equality (proportional taxes and fines), or further regulation. But you don’t do that by changing fundamental rights that every artist so far has relied on to learn their craft, and that also made society what it is today. Your idea would KILL scientific progress, because all of it depends on both for-profit businesses (not necessarily the scummy ones) and the freedom to analyze works without a license (something you seem to want to get rid of), the vast majority of which is computer-driven. You are arguing in favor of shooting yourself in the foot if it means “owning the big companies”, when there are clearly better solutions, and guess what, we already have pretty bad luck getting those passed as is.

And you think most artists and creatives don’t see this? Most of us are honest about how we got to where we are, because we’ve learned how to create and grow our skill set this same way: by consuming (and so, analyzing) a lot of media, and looking a whole lot at other people making things. There’s a reason “good artists copy, great artists steal” is such a well-known line. I’d argue against it, because I feel it frames even something like taking inspiration as theft, but it’s the same argument people are making in reverse for AI.

But this whole conversation shouldn’t be about the big companies, but about the small ones. If you’re not in the industry, you might just not know that AI is everywhere in small companies too. And they’re not using the big companies’ tools if they can help it. There’s open source AI that’s free to download and use, which holds true to the idea of open information that everyone can benefit from. Pretending it doesn’t exist and proposing an unreasonable ban on the means denies it to those without the capital and ability to build their own (licensed) datasets in the future, while those with the means have no problem and can even leverage their own licenses far more efficiently than any small company or individual could. And if AI does get too good to ignore, there will be the artists who learned how to use AI, forced to work for corporations, and the ones who didn’t and can’t compete. So far it’s only been optional, since using AI well is actually quite hard, and only dumb CEOs would trust it to replace a human. It will speed up your workflow and make certain tasks faster, but it doesn’t replace the work in large pieces unless you’re really just making the most generic stuff ever for a living, like marketing material.

Never heard of Cara. I don’t doubt it exists somewhere, but I’m wholly uninterested in it or in putting any work I make there. I will fight tooth and nail for what I made to be mine and for my right to profit off it, but I’m not going to argue for taking away from others the freedom that allowed me to become who I am, or the freedom of people to make art in any way they like. Freedom of expression is sacred to me. I will support alternatives that have broader appeal and are far more likely to succeed in putting these companies in their place, and anything sensible that doesn’t also cause casualties elsewhere. But I’m not going to be in favor of being the “freedom of expression police” against my colleagues, my friends, or anyone for that matter, over what tools they can or cannot use to funnel their creativity into. This is a downright insidious mentality in my eyes, and so far most people I’ve had a good talk about AI with have shared that distaste, while agreeing that it is being abused by big companies.

Again, they can use whatever they want, but Nightshade (and Glaze) are not proven to be effective, in case you didn’t know. They rely on misunderstandings, hypothetically only work under extremely favorable conditions, and assume the people collecting the dataset are really, really dumb. That’s why I call them snake oil. I’m not the only one saying exactly this.

Agreed. The problem is that so many (including in this thread) argue that training AI models is no different than training humans—that a human brain inspired by what it sees is functionally the same thing.

My response to why there is still an ethical difference revolves around two arguments: scale, and profession.

Scale: AI models’ sheer image output makes them a threat to artists where other human artists are not. One artist clearly profiting off another’s style can still be inspiration, and even part of the former’s path toward their own style; however, the functional equivalent of ten thousand artists doing the same is something else entirely. The art is produced at a scale that could drown out the original artist’s work, without which such image generation wouldn’t be possible in the first place.

Profession: Those profiting from AI art, which relies on unpaid scraping of artists’ work for datasets, are not themselves artists. They are programmers, engineers, and the CEOs and stakeholders who can afford the ridiculous capital needed to utilize this technology at scale in the first place. The idea that this is just a “continuation of the chain of inspiration from which all artists benefit” is nonsense.

As the popular adage goes nowadays, “AI models allow wealth to access skill while forbidding skill to access wealth.”

Oooo an AI straw man

“We talk about freedom the same way we talk about art,” she said, to whoever was listening. “Like it is a statement of quality rather than a description. Art doesn’t mean good or bad. Art only means art. It can be terrible and still be art. Freedom can be good or bad too. There can be terrible freedom.”

Joseph Fink, Alice Isn’t Dead

But doesn’t art need the intention to create it, or at least to declare it as art? For example, would the meme I made be considered art even if it was not my intention to create art?

(Okay, this is less about AI and more about philosophy at this point.)

I think art is as much in the eye of the viewer as it is the maker. You’ll never convince me that Jackson Pollock was an artist, I simply don’t see the art in his work, but you may have a life changing emotional experience viewing it. My opinion doesn’t devalue your experience any more than your experience devalues mine.

Art doesn’t need the intention to create art in order to be art. Everything is “art.” From the beauty of the Empire State Building to the most mundane office building, all buildings fall under the category of art known as architecture, the same way that McDonald’s technically falls under the category of the culinary arts.

Your argument that image generators are okay because you don’t intend to make art is like arguing that you don’t want to wear fashion and then buying your clothes on Temu. From the most ridiculous runway outfit to that T-shirt you got at Walmart, all clothes are fashion, but that’s not the issue. The issue would be that you bought fast fashion, an industry built entirely on horrible working conditions and poor wages that is an ecological nightmare. And this is the issue with these generators: they sell you a product made using stolen work (basically wage theft) that uses more electricity than every renewable energy resource on the planet.

The issue isn’t the tech. It’s the companies making the tech and the ethics involved. Though there’s an entire other discussion to be had about the people who call themselves artists because they generate images, but that’s not relevant here.

Depends entirely on your definition of art.

To me art is playing with your senses. A painting plays with your sight. Music plays with your hearing. Statues play with your touch. Dancing plays with your sense of balance and proprioception. …

So anything that does that, like a nice sunset, is art to me.

I agree.

I have all these images in my head and zero artistic skills to create them.

Thanks AI, if indeed that is your real name, for helping me with visual aids for my teaching work!

I honestly think it’s pretty weird that people don’t like AI art memes.

That’s its best case use, guys. Making a computer burn down an acre of Amazon to make a picture of Trump worshipping Putin’s cock.

Yes, a real artist could waste their skill doing it, but why tho?

Using it for stupid shit is fine, especially if it fucks with the AI by making it turn out even more weird shit.