Cloudflare uses a wall of lava lamps for some of their RNG needs

To collect this data, Cloudflare has arranged about 100 lava lamps on one of the walls in the lobby of the Cloudflare headquarters and mounted a camera pointing at the lamps. The camera takes photos of the lamps at regular intervals and sends the images to Cloudflare servers. All digital images are really stored by computers as a series of numbers, with each pixel having its own numerical value, and so each image becomes a string of totally random numbers that the Cloudflare servers can then use as a starting point for creating secure encryption keys.

I’m sure they’re smarter than me but my novice cryptographer brain doesn’t understand this.

Isn’t the string of numbers representing each pixel rather limited? Aren’t all those sections of the image that have lava lamps limited to values somewhere in the reddish/blueish spectrum? Isn’t the gray background very non-random?

Apparently the camera is accessible in the lobby. They say people walking in front of the camera adds randomness. If I go there and hold a photo in front of the camera which I know the values of, doesn’t that compromise everything?

To be fair, their site says they take the lava lamp output and combine it with entropy from two servers, and they probably do a lot of other stuff before actually getting random numbers. But I don’t get how the lava lamp photo setup is even close to being random.

Tried and failed to add an image, so here’s a link: https://www.cloudflare.com/learning/ssl/lava-lamp-encryption/

If you hash the image with a strong algo, even a single different pixel should end up in a wildly different result.
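To make that concrete, here is a minimal sketch using SHA-256 from Ruby's standard library. The 1024-byte gray "image" and the `bit_difference` helper are made up for illustration; a real camera frame would be far larger.

```ruby
require 'digest'

# Count how many bits differ between two equal-length binary strings.
def bit_difference(a, b)
  a.bytes.zip(b.bytes).sum { |x, y| (x ^ y).to_s(2).count('1') }
end

# Hypothetical image: 1024 pixels, all the same shade of gray (value 128).
image = ([128] * 1024).pack('C*')

# Same image with a single pixel nudged from 128 to 129.
tweaked = image.dup
tweaked.setbyte(500, 129)

digest_a = Digest::SHA256.digest(image)
digest_b = Digest::SHA256.digest(tweaked)

changed = bit_difference(digest_a, digest_b)
puts "#{changed} of 256 digest bits differ"
```

With a good hash, roughly half of the 256 output bits should flip even though only one input bit changed.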

But different doesn’t mean random. An attacker could test every possibility for that pixel rather quickly. Even faster if you know a bunch of the pixels are, for example, a shade of gray.
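A rough sketch of that attack, assuming the attacker knows every pixel except one and can see the hash that was used as the seed. The 16-pixel image, the secret value 201 and the pixel index are all invented for the example.

```ruby
require 'digest'

# Hypothetical 16-pixel grayscale image. The attacker knows every pixel
# except the one at index 5.
known_pixels = Array.new(16, 128)
secret_value = 201

image = known_pixels.dup
image[5] = secret_value
observed_digest = Digest::SHA256.hexdigest(image.pack('C*'))

# Try all 256 possible values for the unknown pixel.
recovered = (0..255).find do |guess|
  candidate = known_pixels.dup
  candidate[5] = guess
  Digest::SHA256.hexdigest(candidate.pack('C*')) == observed_digest
end

puts "Recovered pixel value: #{recovered}"
```

The hash output looks nothing like the input, but it only carries as much uncertainty as the input had: here, at most 8 bits.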

I found an explanation where they bring up the issues I brought up as well as some others.

https://blog.cloudflare.com/lavarand-in-production-the-nitty-gritty-technical-details/

They give an example with one red value for a single pixel. They don’t address my point that there are a lot of pixels like that one. Maybe not all would be 50/50 like their example, but a lot of pixels would have a much narrower range of values than a truly random source would give.

But they answer my second point about a hacker putting something with known values in front of the camera, and that answer sort of takes care of everything. It boils down to this: it doesn’t matter, because the lava lamp wall output is mixed with other sources that an attacker doesn’t have access to.
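Here is a minimal sketch of why mixing helps. This is not Cloudflare's actual construction; `mix_entropy` is a hypothetical helper, and `SecureRandom` merely stands in for "sources the attacker can't see".

```ruby
require 'digest'
require 'securerandom'

# Combine several entropy inputs by hashing them together.
# Each input is length-prefixed so that different splits of the same
# bytes cannot produce the same hash input.
def mix_entropy(*sources)
  framed = sources.map { |s| [s.bytesize].pack('N') + s.b }.join
  Digest::SHA256.digest(framed)
end

# The attacker fully controls this input (the photo held up to the camera).
attacker_chosen_image = 'photo the attacker held up to the camera'

# Stand-ins for the other sources, which the attacker cannot observe.
server_entropy_a = SecureRandom.random_bytes(32)
server_entropy_b = SecureRandom.random_bytes(32)

seed = mix_entropy(attacker_chosen_image, server_entropy_a, server_entropy_b)
puts seed.unpack1('H*')
```

As long as at least one input is unpredictable to the attacker, knowing or even choosing the others doesn't let them work out the seed.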

Surely that can’t be uniform random though

But they’re just using it for a seed, so the output would be impossible to predict, but it feels like a checksum or something would approach a Gaussian distribution (the more numbers you add up, the more Gaussian it would be, since we know an image will have a mean and finite variance).

There are ways to get entropy out of non-uniform data in order to approach if not reach a uniform distribution.

A naïve, but surprisingly effective way to do this would be to put the data through a hashing algorithm of some sort.

Good hashing algorithms are specifically designed to make similar but non-identical inputs hash to values that appear unrelated.

Depending on the data source, there may be more efficient ways of getting an unpredictable sequence of bits out of it. e.g. for image data, an image difference from an average image may be more appealing than using the plain image, but I’m not sure whether that’s legitimately “more random” or whether it just feels that way.
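Besides hashing, one classic way to get unbiased bits out of a biased source is von Neumann's trick: read the bits in pairs, output the first bit of each pair that differs, and throw away pairs that match. It assumes the input bits are independent of each other, which real image data is not, so treat this as a sketch of the idea rather than something to point at a camera. The function name is my own.

```ruby
# Von Neumann debiasing: turn a biased but independent bit stream into
# unbiased bits. Pairs 01 -> 0, 10 -> 1, and 00 / 11 are discarded.
def von_neumann_extract(bits)
  bits.each_slice(2)
      .select { |pair| pair.size == 2 && pair[0] != pair[1] }
      .map(&:first)
end

biased_bits = [0, 1, 1, 1, 1, 0, 0, 0, 1]
p von_neumann_extract(biased_bits) # => [0, 1]
```

The price is throughput: the more biased the source, the more pairs get discarded.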

Math! Also, noise!

There are algorithms (a set of math steps) that make pseudo-random numbers. These usually involve large prime numbers, because those usually generate fewer repeating patterns.

A truly random number generator is similar to rolling dice: you use some source of randomness and convert it to a number. All electric circuits produce “noise” (which is often received radio waves and such that interfere with the circuits). Think of tuning a radio to a channel with nothing on it–you get “white noise”, which can be a good source of random information. Then all you need to do is convert that to a range of numbers, and you’re good to go.

These are fairly simplified explanations, so take them with a grain of salt, but they give the general idea.
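One wrinkle in "convert that to a range of numbers": a plain modulo can favor some results slightly. A common fix is rejection sampling, sketched below. `uniform_below` and the `next_byte` callable are hypothetical names; the fixed byte list stands in for a real noise source so the example is repeatable.

```ruby
# Map raw noise bytes to a uniform integer in 0...n (for n <= 256).
# `next_byte` is any callable returning one byte (0..255) from the source.
def uniform_below(n, next_byte)
  limit = 256 - (256 % n) # largest multiple of n that fits in a byte
  loop do
    byte = next_byte.call
    # Bytes at or above the limit would bias the result, so skip them.
    return byte % n if byte < limit
  end
end

# Stand-in for a noise source: three fixed bytes.
sampled = [255, 253, 7].each
die_roll = uniform_below(6, -> { sampled.next }) + 1
puts die_roll # => 2
```

Here 255 and 253 are rejected (the limit for a six-sided die is 252), and 7 maps to face 2.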

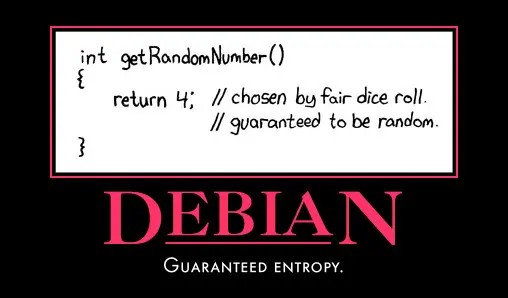

Why Debian?

Skill issue

All random, all thuh time

I see a lot of good answers here but let’s try it from another angle.

How do we get randomness from a function or formula?

For starters, let’s set up a few simple rules.

Every time our random function is called we’ll

- Take the last output from a variable we call LAST_RESULT

- If there’s no value in LAST_RESULT we’ll assume the value is 1

- We run a set of calculations storing the value in a variable we call X

- We store the result of these calculations in LAST_RESULT

- We return this new “random” number.

So let’s call it.

> Random()

Since LAST_RESULT is undefined, set LAST_RESULT to the value of 1.

Set X to the result of this calculation: (LAST_RESULT + 1) * 3. X is now 6.

Set X to the result of this calculation (rounding to the nearest whole number): (X + 7) / 2. X is now 7.

Set X to the result of this calculation (rounding to the nearest whole number): X / LAST_RESULT. X is now 7.

Set LAST_RESULT to the value of X. LAST_RESULT is now 7.

Return the value of X as the result. Result is 7.

Ok. So let’s call it again

> Random()

Set X to the result of this calculation: (LAST_RESULT + 1) * 3. X is now 24.

Set X to the result of this calculation (rounding to the nearest whole number): (X + 7) / 2. X is now 16.

Set X to the result of this calculation (rounding to the nearest whole number): X / LAST_RESULT. X is now 2.

Set LAST_RESULT to the value of X. LAST_RESULT is now 2.

Return the value of X as the result. Result is 2.

And if we call it again we get seemingly random results

Random() Result is 4

Random() Result is 3

But the next time you run it, you’ll get the same results in the same order: 7, then 2, then 4, then 3.

So what you need is something to “seed” the random number calculation.

Something like

SetRandomSeed: set LAST_RESULT to the current second of the day.

Then when you call Random after this it starts with that as the prior results and gives seemingly random results.

Of course my calculations are rough and probably fail/repeat after so many calls but it gives you an idea of how this works.
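The rules above are small enough to write out directly. Here is a rough Ruby sketch; the class and method names (`ToyRandom`, `next_value`) are made up, and treating a seed of 0 as "no value" is my own assumption to avoid dividing by zero.

```ruby
# Toy generator following the steps above. Not suitable for real use.
class ToyRandom
  # seed: optional starting value for LAST_RESULT (nil or 0 means "unset").
  def initialize(seed = nil)
    @last_result = seed.nil? || seed.zero? ? nil : seed
  end

  def next_value
    @last_result ||= 1                   # no previous value: assume 1
    x = (@last_result + 1) * 3
    x = ((x + 7) / 2.0).round            # round to nearest whole number
    x = (x / @last_result.to_f).round    # round to nearest whole number
    @last_result = x
  end
end

unseeded = ToyRandom.new
p Array.new(6) { unseeded.next_value }   # => [7, 2, 4, 3, 3, 3]

# "Seeding" with a second of the day (12:34:56 is second 45296).
seeded = ToyRandom.new(45_296)
p Array.new(3) { seeded.next_value }
```

Running it past the fourth call shows the "fail/repeat" problem right away: once the toy generator reaches 3, it stays on 3 forever.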

So the trick is to get noise for the seed. That could be the number of non-leap seconds since 00:00:00 UTC on Thursday, 1 January 1970 (the Unix epoch).

Or the temperature reading of a CPU chip.

Maybe it’s the ratio of red vs yellow from a camera feed looking at lava lamps.

Or the current user’s average typing speed.

An additional note: many of those would not be “cryptographically” secure for encryption, because they can easily be determined by a third party. For example, we all experience the same “Unix epoch” to within a few milliseconds if our system clocks are properly set. Others can be monitored from afar and reproduced (a hacked webcam shows they typed the following letters in the previous 27 seconds, which we know the “algorithm” uses, etc.).
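Here is a small sketch of how quickly a clock-based seed falls over. The timestamps are invented, and the "victim" just uses Ruby's built-in `Random`, which is a general-purpose PRNG and not meant for cryptography.

```ruby
# Hypothetical victim: seeds a PRNG with a Unix timestamp.
secret_seed = 1_700_000_123
victim = Random.new(secret_seed)
observed = Array.new(3) { victim.rand(1_000_000) }

# The attacker only knows the seed was taken within a ten-minute window,
# plus a few outputs they managed to observe.
window = (1_700_000_000..1_700_000_600)

recovered_seed = window.find do |guess|
  candidate = Random.new(guess)
  Array.new(3) { candidate.rand(1_000_000) } == observed
end

puts "Recovered seed: #{recovered_seed}"
```

That is at most 601 guesses. Once the seed is known, every later "random" number from that generator is known too.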

Pseudo-random numbers come from a special set of mathematical equations which act as the basis for natural processes. These are known as nonlinear dynamic equations.

Their outputs feed back into their inputs. They show areas of high initial sensitivity where any tiny change in input totally changes the output over time. Finally, they often show areas of different cycling behavior. The branch of math which studies them is holomorphic dynamics.

The pseudo-randomness of slightly different seed values generating wildly different outputs has to do with sensitivity to initial conditions. This is a property of the parameter space structures that those random number sequences cycle through. The ‘path’ of numbers that will be cycled through is determined by the starting position and the geometric topology in the complex plane which the equation generates.

Graphing and iterating pseudo-random equations in the complex plane generates infinitely complex geometric structures called Julia sets, which govern how algebraic numbers cycle through pseudo-random walks depending on the initial seed values and the equation used. These Julia sets are often fractals with infinite complexity at their borders at all scales of precision.

Julia sets have a “stuff goes everywhere” property, which is the real magic of where sensitivity to initial conditions comes from. But now we’re getting deep into the weeds of math nerd territory.

Simply put, you put a random number in and it spits a more-or-less random number out, thanks to weird properties of the higher-dimensional fractal hyperstructures that the equation generates in the complex plane. Those lower-dimensional random number cycles are embedded into the Julia set structurally.

A big issue with pseudo-random generators is that they will always give the same series of numbers if you begin the equation with the same computationally finite seed values. You could use the generated sequence of numbers to work back and find the seed values and equation used to generate them. This is a serious security concern when using them for cryptography. How much computational work that takes depends on the generator: for simple, non-cryptographic generators it is practical on ordinary hardware, while cryptographically secure generators are designed to make it infeasible.

The mechanics of pseudo-random numbers come from statistical combinatorics, nonlinear algebra, fractals, chaos theory, and sensitivity to initial conditions.

True random numbers come from directly measuring physical phenomena with sufficient randomness in their mechanics.

Things like the decay of a radioactive isotope or lava lamp turbulence have built-in randomness. There is no seed, and no way to generate the same sequence of motions or to predict when isotopes decay.

Turbulence, for example, has fractal properties in its energy distribution as well as random Brownian motion adding up on the atomic scale. Radioactive half-life has the uncertainty principle built into it. These universal operations have true incalculable randomness thanks to entropy, the uncertainty principle, fractals, Brownian motion, chaos theory, and sensitivity to initial conditions.

The physical universe is the most powerful computer there will ever be. It calculates with infinite decimal precision in its mixed mathematical, statistical, and physical operations. It uses real transcendental numbers like pi, with infinite non-repeating decimals, and does its calculations at the speed of causality/light.

Our best super computers will never be infinitely powerful. Our numbers need to be finite and computable to work with them and understand them. The universe could not care less if its values are finitely computable or usable for human work.

So there are fundamental limits to how random we can get through artificial computer algorithms using computable numbers. True randomness through physical processes leverages the universe’s built-in infinite precision and mechanical algorithms as a black box and just measures the output.

In my opinion it is more computer science sorcery than math sorcery.

For true random generation you usually need some specialized hardware that uses some natural source of randomness. One could use the decay of a radioactive material as such a source, or the noise one can get from audio input. Unfortunately, I don’t know what actual hardware uses.

For pseudo-random generation, you usually use a seed (ideally a true random value or something with high entropy) which you feed into an algorithm like a Linear Congruential Generator (LCG) or the Mersenne Twister (there are lots of algorithms).
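An LCG is simple enough to show in a few lines. This sketch uses the multiplier and increment popularized by Numerical Recipes (a = 1664525, c = 1013904223, m = 2^32); the class and method names are my own.

```ruby
# Linear Congruential Generator: state = (A * state + C) mod M.
class Lcg
  A = 1_664_525
  C = 1_013_904_223
  M = 2**32

  def initialize(seed)
    @state = seed % M
  end

  # Next raw integer in 0...M.
  def next_int
    @state = (A * @state + C) % M
  end

  # Next float in [0, 1).
  def next_float
    next_int / M.to_f
  end
end

gen = Lcg.new(0)
puts gen.next_int # => 1013904223
```

Everything about the output is determined by the seed, and an LCG like this is easy to predict, which is why it is fine for a game and unsuitable for cryptography.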

One further important note: the use case for which you need random numbers is important. A video game could accept a random number generator with “lower” quality, while a cryptographic algorithm always needs a cryptographically secure random number generator (don’t forget: “don’t roll your own crypto”).

Finally, there are quasi-random number generators, although this name is very misleading. The mathematically correct term is low-discrepancy sequence. They are not random at all, but they have useful properties and can be used in some settings where pseudo-random number generators are used. Never in a cryptographic algorithm, though.
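A simple example of a low-discrepancy sequence is the van der Corput sequence, which mirrors the digits of the counter around the decimal point. The sketch below is base 2 by default; the function name is my own.

```ruby
# n-th element of the van der Corput sequence in the given base.
# The digits of n are reversed and placed after the radix point.
def van_der_corput(n, base = 2)
  value = 0.0
  denominator = 1.0
  while n > 0
    denominator *= base
    n, digit = n.divmod(base)
    value += digit / denominator
  end
  value
end

p (1..4).map { |i| van_der_corput(i) } # => [0.5, 0.25, 0.75, 0.125]
```

The points fill the interval evenly rather than randomly, which is the whole point and also why the next value is trivially predictable.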

An interesting source of randomness is using a diode “in reverse”. Randomly, a few electrons pass through, which can be amplified and measured. One uses a 2^n number of such constructs and XORs the results to get a random bit.
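The reason XORing several such bits helps: if each bit is independent and comes up 1 with probability p, the XOR of k of them comes up 1 with probability (1 - (1 - 2p)^k) / 2, which rushes toward 1/2 as k grows. A small sketch, assuming independent sources (the function names are my own):

```ruby
# XOR a list of bits together into a single bit.
def xor_bits(bits)
  bits.reduce(:^)
end

# Probability that the XOR of `count` independent bits is 1,
# when each bit is 1 with probability `p_one`.
def probability_of_one_after_xor(p_one, count)
  (1 - (1 - 2 * p_one)**count) / 2.0
end

p xor_bits([1, 0, 1, 1]) # => 1

[1, 2, 4, 8].each do |count|
  puts "#{count} source(s): P(1) = #{probability_of_one_after_xor(0.6, count)}"
end
```

With sources that are 60/40 biased, eight of them XORed together are within about one part in a million of a fair coin.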

Great write-up, now I have to google what a Mersenne Twister is. To use audio input noise as a random number gen, I would just hook it up to a precision digital dB meter, but I’m guessing the software implementation is a little more practical.

A software solution can usually create “random” numbers faster, with the drawback that they’re not actually random.

The Mersenne Twister was a famous pseudo-random number generator when I wrote my diploma thesis in 2009. Today, afaik, PCGs (Permuted Congruential Generators) are better.

Another tidbit: operating systems (like Linux) usually provide a way to get entropy (ideally used as a seed). Linux, for example, has /dev/urandom, among others. Afaik, it uses the time between subsequent accesses to the HDD as one of the sources used to create the entropy.
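From a program you rarely read the device yourself; most languages wrap it. A sketch in Ruby, where `SecureRandom` asks the operating system (or OpenSSL, depending on the build) for random bytes. The direct read at the end is guarded because /dev/urandom only exists on Unix-like systems.

```ruby
require 'securerandom'

# 128-bit seed from the OS-backed generator.
seed = SecureRandom.random_number(2**128)

# Use it to seed an ordinary (non-cryptographic) PRNG.
prng = Random.new(seed)
puts prng.rand(100)

# A random token, for things like session IDs.
token = SecureRandom.hex(16)
puts token

# Reading the device directly, where it exists.
if File.readable?('/dev/urandom')
  raw = File.binread('/dev/urandom', 16)
  puts raw.unpack1('H*')
end
```

For anything security-related, use the `SecureRandom` values directly and skip the seeded `Random` step.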

They get some dude hammered drunk, then stand him by a pole and ask him to take 100 steps.

I love the idea of millions of drunk dudes by poles being employed just for random number generation

Might be the only job that’s left after StarNet takes over.

You can use physical objects like dice or lava lamps that will naturally form a random distribution when we check. But Newton and others would argue that even this was a deterministic problem, and if you had perfect knowledge of the dice and a good physics theory, you could predict the outcome.

We can only recognize randomness by the patterns it leaves behind.

The philosophical truth is that we don’t know if “randomness” is an actual phenomenon or just a bucket where we put outcomes we haven’t learned to predict yet. A sort of randomness of the gap. Some have suggested that as a pattern-recognizing machine, the human mind simply can’t conceive randomness. Even the way “randomness” is verified is by looking at the distribution of the outcomes and seeing if it matches the pattern we expect.

The notion that our universe is perfectly causal to the point that you can predict exactly when and where that specific atom will decay is pretty much debunked at this point. Not that living in a probabilistic, quantum physics universe is any fucking easier to comprehend but them’s be the cards we were dealt.

How was it debunked?

I would say “debunked” in the sense that quantum mechanics correctly predicts phenomena that don’t exist in classical physics, and relies on the idea that quantum particles obey a probability distribution, rather than deterministic mechanics.

Quantum mechanics appears to work so well for these phenomena compared to deterministic mechanics that it’s tempting to say that the actual universe is in fact governed by probabilities rather than determinism.

I would argue that all physical models of the universe are just that: Models. We can get asymptotically closer to a perfect description of the universe, but no model can ever tell us the true nature of the underlying system it is describing, just be an arbitrarily good description of it.

I’d say it actually goes further. We have plenty of evidence leading to the realization that simply measuring a phenomenon changes the phenomenon. From a quantum mechanics perspective we say things like “measuring the phenomenon collapses its wave function to a single state.”

When a quantum system is measured, its wave function, which represents a superposition of multiple potential outcomes, collapses to a single definite state corresponding to the result of the measurement.

All macroscopic phenomena comprise nanoscopic quantum phenomena.

Super fucking weird to think about. The classic undergrad physics experiment is the double-slit experiment— particles like electrons create an interference pattern when unobserved, acting like waves and passing through both slits at once. However, when we measure which slit a particle goes through, this wave-like behavior disappears, and the particle behaves as if it went through only one slit. This shows that measurement collapses the particle’s wave function from multiple possibilities into a single, definite state.

Similarly, despite being depicted as such in early exposures to chemistry, electrons don’t “orbit” the nucleus like planets do their stars—rather they have regions around the nucleus in which they are more probably found. These misleadingly named “orbitals” vary in shape.

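Finally, we have the Heisenberg Uncertainty Principle, which states that we cannot know both a particle’s momentum and its position to arbitrary precision at the same time. It is often explained as the act of measuring (observing) the particle irrevocably changing it, though the limit is built into quantum mechanics rather than being only a side effect of measurement.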

Here’s a macroscopic example of how measuring/observing things changes the thing. When you measure the temperature of an object using a thermometer, the object is either transmitting or receiving thermal energy to/from the thermometer, because the thermometer needs to be in contact and thermal equilibrium with the object. The object’s total energy level has now changed—even if it’s a trivial change it’s also non-zero. Measuring/observing the object in this way has changed it.

omg it goes deeper. I love physics. Classical mechanics models work well when we want to explain and predict macroscopic and limited chain-of-events phenomena. We can predict with high confidence that a 2000 kg car traveling at 100 km/h will impart this much force and energy to a stationary object when they collide, assuming a perfectly inelastic collision, spherical cows, etc. We can’t model with any confidence, using any classical model, how the displaced air molecules from this collision in Nuremberg, Germany will create tornadoes six months later in Wichita, Kansas, USA. That’s the butterfly effect.

Ultimately, this interplay between measurement and outcome highlights a fundamental truth in both quantum mechanics and chaos theory: the universe is inherently unpredictable at every scale. Just as the behavior of subatomic particles is influenced by the act of observation, the butterfly effect shows us that small changes can lead to significant consequences in complex systems. This intertwining of uncertainty and complexity underscores the limitations of our predictive models, whether they pertain to the quantum realm or the macroscopic world.

Nice try skynet

1000111000111100001100101010101001111111010010

All you need is a bunch of lava lamps and a camera.

Mathematically? True random numbers cannot be.

Electronically?

By listening to environmental noise: human input, but mostly these things called ring oscillators, which are basically chains of NOT gates whose behavior depends on the initial state combined with noise, temperature and process variation.

The real magic is taking some noise source and hiding most of it (think modulo operation or similar) so people see large variations without being able to sample enough of it to find patterns. I.e., if the source is thermal variance, it might have a sine wave effect, but you take only the least significant bits and hide the most significant bits so they can’t easily model the pattern.

There’s more: a lot of finite field math and transforms, whitening functions, etc.
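The "keep only the low bits" part is just a bit mask. A tiny sketch, with invented sensor readings whose high bits barely move while the low bits jitter:

```ruby
# Keep only the `count` least significant bits of a sample.
def low_bits(sample, count)
  sample & ((1 << count) - 1)
end

# Hypothetical 8-bit sensor readings: the top bits follow a slow,
# predictable trend, the bottom bits are dominated by noise.
readings = [0b1011_0110, 0b1011_0111, 0b1011_1001, 0b1011_1010]

p readings.map { |r| low_bits(r, 2) } # => [2, 3, 1, 2]
```

Real designs follow this with the whitening step mentioned above, since raw low bits can still be biased.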

I once read that PokerStars uses the cosmic background noise to shuffle cards (sorting cards might be a more apt term here).

PRNGs aren’t random at all; they produce a deterministic sequence of numbers based on a seed value and an internal counter. Two PRNGs using the same algorithm and seed will produce the same sequence of numbers. The sequence is difficult to predict without knowing the algorithm and seed, and the values are close to evenly-distributed, which is enough like random numbers for a lot of use cases.

Here’s an example in Ruby:

    seed = Random.new_seed()
    # => 142757148148443078663499575299582907518
    prng_1 = Random.new(seed)
    prng_1.rand()
    # => 0.6702742156250219
    prng_2 = Random.new(seed)
    prng_2.rand()
    # => 0.6702742156250219
    prng_1.rand()
    # => 0.9667236181962573
    prng_2.rand()
    # => 0.9667236181962573

If you run this yourself using 142757148148443078663499575299582907518 as the seed, your first two pseudorandom numbers will also be 0.6702742156250219 and 0.9667236181962573, assuming your version of Ruby hasn’t changed its PRNG.