The problem is that most languages have no native support for anything other than 32- or 64-bit floats, and some wire representations don’t either. Most underlying processors don’t have arbitrary-precision support either.

So either you choose speed and sacrifice precision, or you choose precision and sacrifice speed. The architecture might not support arbitrary precision but most languages have a bignum/bigdecimal library that will do it more slowly. It might be necessary to marshal or store those values in databases or over the wire in whatever hacky way necessary (e.g. encapsulating values in a string).
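To make the "encapsulate it in a string" trick concrete, here is a minimal Python sketch using the standard-library `decimal` and `json` modules. The field name `amount` is made up for illustration, and this is only one way to do it, not a recommendation for any particular wire format.

```python
import json
from decimal import Decimal

# Exact decimal arithmetic: slower than hardware floats, but no binary rounding.
price = Decimal("0.10") + Decimal("0.20")

# Marshal as a string so nothing on the way rounds it to a binary float.
# "amount" is a hypothetical field name.
payload = json.dumps({"amount": str(price)})

# The receiving side rebuilds the exact value from the string.
restored = Decimal(json.loads(payload)["amount"])
```

The cost is that every consumer has to know the field is a number in disguise and parse it accordingly.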

I know this is in jest, but if 0.1+0.2!=0.3 hasn’t caught you out at least once, then you haven’t even done any programming.

IMO they should just remove the equality operator on floats.
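For anyone who hasn't been bitten yet, a quick Python sketch of the problem and of the usual workaround, comparing with a tolerance instead of `==`. The tolerance value here is an arbitrary pick for the example; the right one depends on your data.

```python
import math

a = 0.1 + 0.2

# Exact comparison fails because neither 0.1, 0.2 nor 0.3 is exactly
# representable in binary floating point.
exact_equal = (a == 0.3)

# Comparing within a relative tolerance is the usual substitute for ==.
# rel_tol=1e-9 is an assumption chosen for this example.
close_enough = math.isclose(a, 0.3, rel_tol=1e-9)

print(exact_equal, close_enough)
```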

That should really be written as the gamma function, because factorial is only defined for members of Z. /s

While we’re at it, what the hell is -0 and how does it differ from 0?

It’s the negative version

So it’s just like 0 but with an evil goatee?

Look at the graph of y=tan(x)+π/2

-0 and +0 are completely different.
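A small Python sketch of where the two zeros agree and where they don't. As far as IEEE 754 goes they compare equal, but the sign bit is still there and some functions look at it.

```python
import math

pos, neg = 0.0, -0.0

# The two zeros compare equal...
same = (pos == neg)

# ...but the sign bit is observable through copysign.
sign_pos = math.copysign(1.0, pos)
sign_neg = math.copysign(1.0, neg)

# atan2 uses the sign of zero to pick which side of the branch cut you are on.
angle_pos = math.atan2(0.0, pos)
angle_neg = math.atan2(0.0, neg)

print(same, sign_pos, sign_neg, angle_pos, angle_neg)
```

So "different" here means "different direction you approached zero from", not "different value".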

Call me when you’ve found a way to encode transcendental numbers.

Do we even have a good way of encoding them in real life without computers?

Just think about them real hard

\pi

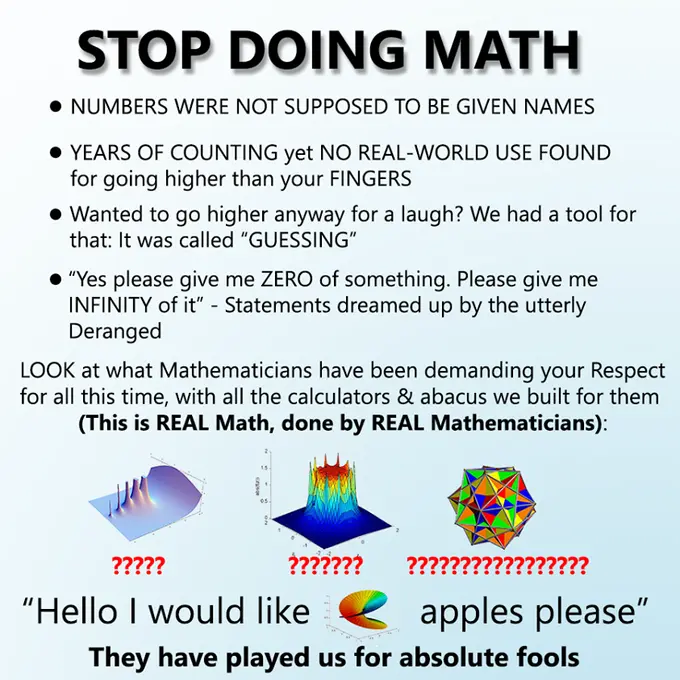

From time to time I see this pattern in memes, but what is the original meme / situation?

It’s my favourite format. I think the original was ‘stop doing math’

math are numbers and therefore non-physical, and therefore esoterical, so stop giving it credit.

/s

Off topic, but how does one get a profile pic on Lemmy? Also love you ken.

you can configure it in the web interface. just go to your profile

As a programmer who grew up without an FPU (Archimedes/Acorn), I have never liked float. But I thought this war had been lost a long time ago. Floats are everywhere. I’ve not done graphics for a bit, but I never saw a graphics card that took any form of fixed point. All geometry you load in is in floats. The shaders all work in floats.

Briefly ARM MCU work was non-float, but loads of those have float support now.
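For readers who never had to live without an FPU, here is a rough Python sketch of what fixed point looks like. The Q16.16 layout and the helper names are picked purely for illustration; real code on an MCU would be in C with explicit integer widths.

```python
# Q16.16 fixed point: 16 integer bits, 16 fractional bits, stored in a plain int.
# The format choice and helper names are assumptions made for this sketch.
FRAC_BITS = 16
ONE = 1 << FRAC_BITS

def to_fixed(x: float) -> int:
    """Convert a float to Q16.16 by scaling and rounding."""
    return round(x * ONE)

def from_fixed(f: int) -> float:
    """Convert Q16.16 back to a float for display."""
    return f / ONE

def fixed_mul(a: int, b: int) -> int:
    """Multiply two Q16.16 values; the raw product has 32 fractional bits,
    so shift right to get back to 16."""
    return (a * b) >> FRAC_BITS

product = from_fixed(fixed_mul(to_fixed(1.5), to_fixed(2.25)))
print(product)
```

Python integers never overflow, so this hides the main pain point: in C the intermediate product needs a wider type than the operands.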

I mean you can tell good low level programmers because of how they feel about floats. But the battle does seem lost. There are lots of bits of technology that have taken turns I don’t like. Sometimes the market/bazaar has spoken and it’s wrong, but you still have to grudgingly go with it or everything is too difficult.

IMO, floats model real observations.

And since there is no precision in nature, there shouldn’t be precision in floats either.

So their odd behavior is actually entirely justified. This is why I can accept them.

> all work in floats

We even have float16 / float8 now for low-accuracy hi-throughput work. Even float4. You get +/- 0, 0.5, 1, 1.5, 2, 3, Inf, and two values for NaN.
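That value list falls out if you assume an IEEE-style 4-bit layout: 1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1, with the all-ones exponent reserved for Inf/NaN. That layout is an assumption for this sketch (actual hardware formats may differ), and `decode_fp4` is a made-up helper name.

```python
import math

def decode_fp4(bits: int) -> float:
    """Decode a 4-bit pattern under an assumed IEEE-style layout:
    1 sign bit, 2 exponent bits, 1 mantissa bit, bias 1."""
    sign = -1.0 if bits & 0b1000 else 1.0
    exp = (bits >> 1) & 0b11
    man = bits & 0b1
    if exp == 0b11:
        # All-ones exponent: mantissa 0 is infinity, mantissa 1 is NaN.
        return math.nan if man else sign * math.inf
    if exp == 0:
        # Subnormal: no implicit leading 1, so the only values are 0 and 0.5.
        return sign * man * 0.5
    # Normal: implicit leading 1, one fractional bit worth 0.5.
    return sign * (1 + man * 0.5) * 2.0 ** (exp - 1)

# All 16 bit patterns, positive half first, then the negative half.
values = [decode_fp4(b) for b in range(16)]
print(values)
```

The two NaN patterns are the ones with and without the sign bit set; both decode to the same "not a number".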

Come to think of it, the idea of -NaN tickles me a bit. “It’s not a number, but it’s a negative not a number”.

I think you got that wrong: you get +Inf, -Inf and two NaNs, but they’re both just NaN. As you wrote, a signed NaN makes no sense, though technically speaking they still have a sign bit.

Right, there’s no -NaN. There are two different values of NaN. Which is why I tried to separate that clause, but maybe it wasn’t clear enough.
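The same thing can be poked at with ordinary 64-bit doubles in Python. This sketch builds two NaNs from raw bit patterns that differ only in the sign bit; it assumes the platform keeps the bits intact through `struct`, which is the usual case.

```python
import math
import struct

# Two quiet-NaN bit patterns that differ only in the sign bit.
pos_nan = struct.unpack(">d", bytes.fromhex("7ff8000000000000"))[0]
neg_nan = struct.unpack(">d", bytes.fromhex("fff8000000000000"))[0]

# Both are NaN as far as arithmetic is concerned.
both_nan = math.isnan(pos_nan) and math.isnan(neg_nan)

# NaN never compares equal to anything, including itself.
never_equal = (pos_nan != pos_nan)

# The sign bit is still physically there and copysign can read it.
sign_bits = (math.copysign(1.0, pos_nan), math.copysign(1.0, neg_nan))

print(both_nan, never_equal, sign_bits)
```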

Floats are only great if you deal with numbers that have no need for precision and accuracy. Want to calculate the F cost of an A* node? Floats are good enough.

But every time I need to get any kind of accuracy, I go straight for actual decimal numbers. Unless you are in extreme scenarios, you can afford the extra 64 to 256 bits of memory.
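A quick Python sketch of the kind of drift this avoids, using the standard-library `decimal` module. Adding 0.1 ten times is just an illustrative workload, and the explicit loop is deliberate, since a library summation routine may compensate for the error.

```python
from decimal import Decimal

float_total = 0.0
decimal_total = Decimal("0")

# Add one tenth ten times, once in binary floats and once in decimal.
for _ in range(10):
    float_total += 0.1
    decimal_total += Decimal("0.1")

# The float sum drifts slightly away from 1.0; the decimal sum is exact.
float_exact = (float_total == 1.0)
decimal_exact = (decimal_total == Decimal("1"))

print(float_total, decimal_total, float_exact, decimal_exact)
```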