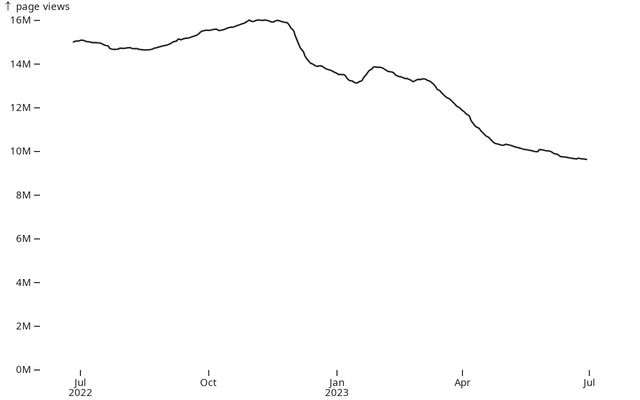

Over the past one and a half years, Stack Overflow has lost around 50% of its traffic. This decline is similarly reflected in site usage, with approximately a 50% decrease in the number of questions and answers, as well as the number of votes these posts receive.

The charts below show the usage represented by a moving average of 49 days.
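For anyone unfamiliar with the smoothing: a 49-day moving average replaces each day's value with the mean of a 49-day window, which evens out the weekly cycle (49 is seven full weeks). A minimal sketch follows, assuming a trailing window; the source does not say whether the window is trailing or centered, and `moving_average` and its parameters are hypothetical names used only for illustration.

```python
from collections import deque


def moving_average(values, window=49):
    """Trailing moving average over `window` samples.

    Returns a list the same length as `values`; positions that do not
    yet have a full window behind them are None.
    Assumption: trailing (not centered) window.
    """
    if window <= 0:
        raise ValueError("window must be positive")

    smoothed = []
    buffer = deque()   # the samples currently inside the window
    total = 0.0        # running sum of the samples in the buffer

    for value in values:
        buffer.append(value)
        total += value
        if len(buffer) > window:
            # Drop the oldest sample once the window is exceeded.
            total -= buffer.popleft()
        smoothed.append(total / window if len(buffer) == window else None)

    return smoothed


if __name__ == "__main__":
    # Tiny made-up series with a 3-sample window, for demonstration only.
    print(moving_average([1, 2, 3, 4, 5], window=3))
```

With daily counts as input, the first 48 entries come back as `None` because a full 49-day window is not available yet.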

What happened?

Why is everyone saying this is because Stack Overflow is toxic? Clearly the decline in traffic is because of ChatGPT. I can say from personal experience that I’ve been visiting Stack Overflow way less lately because ChatGPT is a better tool for answering my software development questions.

The timing doesn’t really add up though. ChatGPT was released in November 2022. According to the graphs on the linked website, traffic, the number of posts and the number of votes were all already in visible decline by then, and at their lowest values in more than two years. And this isn’t even considering that ChatGPT took a while to get picked up into the average developer’s daily workflow.

Anyhow though, I agree that the rise of ChatGPT most likely amplified StackOverflow’s decline.

Understandably, it has become an increasingly hostile or apathetic environment over the years. If one checks questions from 10 years ago or so, one generally sees people eager to help one another.

Now they often expect you to have searched through possibly thousands of questions before you ask one, and immediately accuse you if you missed some – which is unfair, because a non-expert can often miss the connection between two questions phrased slightly differently.

On top of that, some of those questions and their answers are years old, so one wonders if their answers still apply. Often they don’t. But again it feels like you’re expected to know whether they still apply, as if you were an expert.

Of course it isn’t all like that, there are still kind and helpful people there. It’s just a statistical trend.

Possibly the site should implement an archival policy, where questions and answers are deleted or archived after a couple of years or so.

The worst is when you have actually read all those questions, clearly stated how they don’t apply and that you already tried them, and a mod still closes your question as a duplicate.

ChatGPT doesn’t chastise me like a drill instructor whenever I ask it about coding problems.

It just invents the answer out of thin air, or worse, it gives you subtle errors you won’t notice until you’re 20 hours into debugging

I agree with you that it sometimes gives wrong answers. But most of the time, it can help better than StackOverflow, especially with simple problems. I mean, there wouldn’t be such an exodus from StackOverflow if ChatGPT answers were so bad, right?

But, for very specific subjects or bizarre situations, it obviously cannot replace SO.

And you won’t know whether the answers it gave you are OK until it’s too late. It seems like the Russian roulette of tech support: very helpful until it isn’t.

Depending on Eliza MK50 for tech support doesn’t stop feeling absurd to me

How do you know the answer that gets copied from SO will not have any downsides later? ChatGPT is just a tool. I can hit myself in the face with a wrench as well, if I use it in a dumb way. IMHO the people that get bitten in the ass by ChatGPT answers are the same ones that just copied SO code without even trying to understand what it is really doing…

Just like real humans.

Amazing how much hate SO receives here. As a knowledge base it works really well. And yes, a lot of questions have been answered already. And also yes, just like in any other online community, there are bad apples which you unfortunately have to live with.

Idolizing ChatGPT as a viable replacement is laughable, because it has no knowledge, no understanding, of what it says. It’s just repeating what it “learned” and connected. Ask about something new and it will simply lie, which is arguably worse than an unfriendly answer in my opinion.

The advice on Stack Overflow is trash because “that question has been answered already”. Yeah, it was answered 10 years ago on a completely different version. That answer is deprecated.

Not to mention the amount of convoluted answers that get voted to the top and then someone with two upvotes at the bottom meekly giving the answer that you actually needed.

It’s like that librarian from the New York public library who determined whether or not children’s books would even get published.

She gave “Goodnight Moon” a bad score and it fell out of popularity for 30 years after the author died.

I don’t think that’s entirely fair. Typically answers are getting upvoted when they work for someone. So the top answer worked for more people than the other answers. Now there can be more than one solution to a problem but neither the people who try to answer the question, nor the people who vote on the answers, can possibly know which of them works specifically for you.

ChatGPT will just as well give you a technically correct, but for you wrong, answer. And only after some refinement give the answer you need. Not that different from reading all the answers and picking the one which works for you.

I hear you. I firmly believe that comparing the behavior of GPT with that of certain individuals on SO is like comparing apples to oranges though.

GPT is a machine, and unlike human users on SO, it doesn’t harbor any intent to be exclusive or dismissive. The beauty of GPT lies in its willingness to learn and engage in constructive conversations. If it provides incorrect information, it is always open to being questioned and will readily explain its reasoning, allowing users to learn from the exchange.

In stark contrast, some users on SO seem to have a condescending attitude towards learners and are quick to shut them down, making it a challenging environment for those seeking genuine help. I’m sure that these individuals don’t represent the entire SO community, but I have yet to have a positive encounter there.

While GPT will make errors, it does so unintentionally, and the motivation behind its responses is to be helpful, rather than asserting superiority. Its non-judgmental approach creates a more welcoming and productive atmosphere for those seeking knowledge.

The difference between GPT and certain SO users lies in their intent and behavior. GPT strives to be inclusive and helpful, always ready to educate and engage in a constructive manner. In contrast, some users on SO can be dismissive and unsupportive, creating an unfavorable environment for learners. Addressing this distinction is vital to fostering a more positive and nurturing learning experience for everyone involved.

In my opinion this is what makes SO ineffective and is largely why its traffic had dropped even before ChatGPT became publicly available.

Edit: I did use GPT to remove vitriol from and shorten my post. I’m trying to be nicer.

I don’t want to compare the behavior, only the quality of the answers. An unintentional error of ChatGPT is still an error, even when it’s delivered with a smile. I absolutely agree that the behavior of some SO users is detrimental and pushes people away.

I can also see ChatGPT (or whatever) as a solution to that - both as moderator and as source of solutions. If it knows the solution it can answer immediately (plus reference where it got it from), if it doesn’t know the solution it could moderate the human answers (plus learn from them).